2025, the year I made hp-sim5

I spent 2025 simulating Hangprinter.

Before 2025 I envisioned giving normal people economically useful machines via the Hangprinter Project and its community, kind of like this:

The improvement loops were weak, so this didn't work well enough. My updated understanding is that AIs will get robots first, before normal people get them:

This is the way for every product or project. AI is powerful and it is not going away.

So I'm adapting the Hangprinter Project to the AI future. In 2024 I wanted to automate the whole Hangprinter Project, starting with support agents (see hp-support and Hangprinter Knowledge Hub). In 2025 my focus has shifted from "automate the Hangprinter Project" to "insert Hangprinter into the new age". AIs are not independently self-improving yet, so neither is Hangprinter.

But the intelligence explosion is still expanding, and whatever the AIs can't see, control, or optimize will soon be left in a category that has no name yet but will be described as unoptimized, hobbyist, micro-scale, handicraft, uneconomical, manual, niche, old-fashioned, antique, part of History, slow, weak, hard, dumb, irrelevant, you get the idea.

What Does it Mean for Hangprinter to "Fit Into the AI Age"?

What we call AI can often be described as "simulating an interesting process", most often based on examples of the process's output, and then harvesting some artifacts, side effects or breadcrumbs dropped from the simulation.

The most relevant AIs of 2024 simulated text generation, the process previously known as "writing". They can also simulate "thinking", "painting", "speaking", "trading", "driving", "walking" and so on. Even evolution and the weather are simulated all the time. We just call it "hw design" and "weather forecasts". If the simulations are very static, simple, or bare-bones, we sometimes call them "optimization" or "statistical regressions".

So for Hangprinter to merge with the singularity, we need to fully embrace the simulation paradigm: complex simulations of complex processes. Making Hangprinter available to AI in 2025 simply meant building a simulation environment and ignoring the physical parts of the project altogether.

Everything will be done in this simulation environment: The hardware, the software, the physics, the gcode execution. We can even imagine debugging market dynamics down to individual businesses and so on. Our physical machines and their outputs will simply be side effects from this perspective.

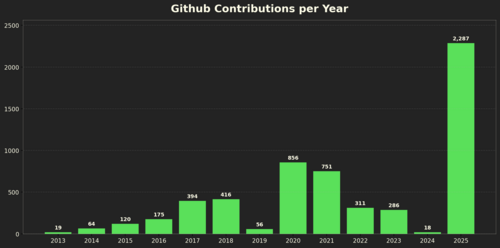

So I named the simulation environment "hp-sim" and went down the rabbit hole of creating it. My community engagement, which had been declining since 2021, went to zero in 2025. No Twitter, no Youtube, no email, (almost) no blog posts.

The focus has paid off.

What Have I Delivered?

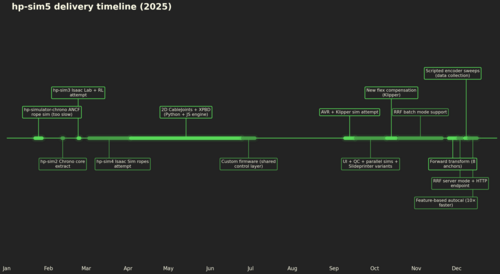

Simulating Hangprinter in 2025 is very hard. (In 2024 it was impossible, in 2027 it will probably be trivial.) The resulting repo is called hp-sim5 because the four attempts before it failed:

- hp-simulator-chrono (Jan 22, 2025 - Jan 27, 2025). I invented ANCF rope simulation in here. Modelling a line as one or a few thin, weak beams in parallel, with separately tunable bending stiffness and axial (pull) stiffness, is interesting, maybe even novel, and realistic. Friction between strands adds bending resistance. However, it was way too slow for realtime. RIP.

- hp-sim2 (Feb 11, 2025 - Feb 12, 2025). An attempt to extract the core of the Chrono simulator into modern C++ to speed things up. RIP.

- hp-sim3 (Feb 23, 2025). An attempt to use a template for Isaac Lab projects and shoehorn Hangprinter into it to get reinforcement learning (RL) control going. Failed because the environment didn't have any primitives for ropes/lines/cables. RIP.

- hp-sim4 (Mar 3, 2025 - Apr 2, 2025). An attempt to build ropes in Isaac Sim (the physics environment that Isaac Lab uses). Failed because the physics engine was too heavily geared towards rigid bodies, and good lines could not be constructed as chains of rigid bodies. RIP.

Luckily, hp-sim4 led me to Matthias Müller's work on rope simulation: first his long distance joints, and later his XPBD and CableJoints, which offered performant and physically accurate cable simulation. Finally, exactly what hp-sim needed.

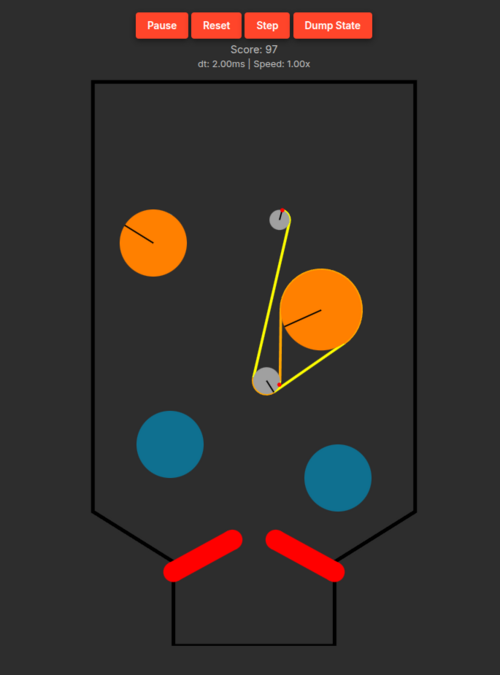

No open source implementation of CableJoints existed, so for about two and a half months (Apr 3, 2025 - Jun 24, 2025) I implemented a 2d version of CableJoints and an XPBD physics engine: inputs, outputs, rendering, parallel implementations in Python and JavaScript, CI testing, user interaction, constraints, friction, etc. End-to-end, specialized to our needs.
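For readers unfamiliar with XPBD (extended position-based dynamics), its core loop is small. The sketch below is not hp-sim5's code. It leaves out everything that makes CableJoints special (sliding attachment points, friction, pulleys) and only shows plain XPBD distance constraints on a 2d particle chain. It uses the substepping variant, with one constraint iteration per substep and the Lagrange multiplier starting at zero in each substep. All class and function names are made up for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    inv_mass: float = 1.0  # 0.0 pins the particle in place
    px: float = 0.0        # position at the start of the current substep
    py: float = 0.0


@dataclass
class DistanceConstraint:
    i: int
    j: int
    rest_length: float
    compliance: float = 0.0  # inverse stiffness (m/N); 0.0 means inextensible


def step(particles, constraints, dt, gravity=-9.81, substeps=10):
    """Advance the system by dt using small-step XPBD (hypothetical helper)."""
    h = dt / substeps
    for _ in range(substeps):
        # 1. Predict positions by integrating gravity explicitly.
        for p in particles:
            p.px, p.py = p.x, p.y
            if p.inv_mass > 0.0:
                p.vy += gravity * h
                p.x += p.vx * h
                p.y += p.vy * h
        # 2. Project each constraint once. With lambda reset to 0 each substep,
        #    delta_lambda = -C / (w_a + w_b + compliance / h^2).
        for c in constraints:
            a, b = particles[c.i], particles[c.j]
            w = a.inv_mass + b.inv_mass
            dx, dy = a.x - b.x, a.y - b.y
            dist = math.hypot(dx, dy)
            if w == 0.0 or dist == 0.0:
                continue
            nx, ny = dx / dist, dy / dist  # gradient of C with respect to a
            violation = dist - c.rest_length
            dlam = -violation / (w + c.compliance / (h * h))
            a.x += a.inv_mass * dlam * nx
            a.y += a.inv_mass * dlam * ny
            b.x -= b.inv_mass * dlam * nx
            b.y -= b.inv_mass * dlam * ny
        # 3. Derive velocities from how far each particle actually moved.
        for p in particles:
            p.vx = (p.x - p.px) / h
            p.vy = (p.y - p.py) / h


if __name__ == "__main__":
    # A 1 m rope of 10 links hanging straight down from a pinned particle.
    rope = [Particle(0.0, -0.1 * k, inv_mass=0.0 if k == 0 else 1.0) for k in range(11)]
    links = [DistanceConstraint(k, k + 1, 0.1) for k in range(10)]
    for _ in range(60):
        step(rope, links, dt=1 / 60)
    print(f"rope end at y = {rope[-1].y:.4f} m")
```

Setting `compliance` above zero makes a link stretchy in a way that does not depend on the timestep or iteration count. That property is the main reason XPBD is attractive for lines with a known axial stiffness.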

Then, with the Slideprinter modelled, lines and all, it needed control software. We needed a 3d printer firmware inside the simulator. I spent 10 days implementing a custom firmware that works with both the Python and the JavaScript CableJoint libraries.

I knew I needed real firmware though, so after the summer (for about a week starting September 9) I tried to simulate a realtime AVR processor on my desktop machine and run Klipper on it. I could not reliably simulate a realtime chip on my non-realtime Linux kernel. No matter how much buffering and other synchronization I added, I could never make that combo deterministic. I even installed a realtime version of the Linux kernel on my desktop before finally backing off from the idea of simulating the whole AVR processor.

Klipper already had rudimentary Hangprinter support and good tools for running and debugging on host. So adding basic Klipper firmware control via "batch dump files", basically just long lists of motor commands and time stamps, was very easy. I spent about a month improving the user interface, adding different Slideprinter designs, implementing quality control, support for parallel simulations, etc.
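To make "batch dump files" concrete, here is a sketch of how a simulator could consume one. The text format (one `time motor steps` event per line), the sign convention (positive steps lengthen the line) and the function names are all hypothetical. The real Klipper and ReprapFirmware dumps that hp-sim5 reads will look different.

```python
from bisect import bisect_right
from collections import defaultdict


def parse_dump(text):
    """Parse a hypothetical 'time_s motor steps' dump into per-motor timelines.

    Returns {motor: [(time_s, cumulative_steps), ...]} in file order.
    """
    events = defaultdict(list)
    totals = defaultdict(int)
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        t_str, motor, steps_str = line.split()
        totals[motor] += int(steps_str)
        events[motor].append((float(t_str), totals[motor]))
    return dict(events)


def line_length_at(events, motor, t, initial_length_mm, mm_per_step):
    """Commanded line length of `motor` at time t (holds the last command)."""
    timeline = events.get(motor, [])
    idx = bisect_right([ts for ts, _ in timeline], t)
    steps = timeline[idx - 1][1] if idx > 0 else 0
    return initial_length_mm + steps * mm_per_step


dump = """# t motor steps
0.000 A 10
0.010 B -5
0.020 A 10
"""
events = parse_dump(dump)
print(line_length_at(events, "A", 0.015, 1000.0, 0.01))
```

The simulator can then drive each spool with `line_length_at` at every physics step. It never needs to know which firmware produced the commands.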

The quality control made it possible to track whether a firmware change improved print quality, so it was time to improve the firmware. I spent one week developing two brand new flex compensation algorithms and implementing them in Klipper (Oct 10, 2025 - Oct 17, 2025). The result is way better than the legacy flex compensation in every way. Details here: a Klipper PR.
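Flex compensation builds on a simple fact: a loaded line stretches, so the firmware should command a slightly shorter unstretched length. The sketch below is not either of the new algorithms from the Klipper PR. It is the textbook idea under these stated assumptions:

- two lines in a vertical 2d plane;
- a point-mass effector;
- no pretension;
- linear-elastic lines with axial stiffness `EA` in newtons.

Tensions come from static equilibrium and lengths from Hooke's law. The function names are hypothetical.

```python
import math


def line_tensions_2d(anchors, effector, mass_kg, g=9.81):
    """Static tensions (N) in two lines holding a point mass, y axis up.

    Solves T1*u1 + T2*u2 = (0, m*g) with Cramer's rule, where u_i is the
    unit vector from the effector towards anchor i.
    """
    ex, ey = effector
    units = []
    for ax, ay in anchors:
        dx, dy = ax - ex, ay - ey
        n = math.hypot(dx, dy)
        units.append((dx / n, dy / n))
    (u1x, u1y), (u2x, u2y) = units
    det = u1x * u2y - u2x * u1y
    if abs(det) < 1e-12:
        raise ValueError("lines are parallel; tensions are undetermined")
    weight = mass_kg * g
    t1 = -u2x * weight / det
    t2 = u1x * weight / det
    if t1 < 0.0 or t2 < 0.0:
        raise ValueError("effector is outside the region where both lines can pull")
    return t1, t2


def flex_compensated_lengths(anchors, effector, mass_kg, ea_newton):
    """Unstretched lengths that stretch to the geometric lengths under load.

    Linear-elastic line: L = L0 * (1 + T / EA), hence L0 = L / (1 + T / EA).
    """
    tensions = line_tensions_2d(anchors, effector, mass_kg)
    lengths = [math.dist(a, effector) for a in anchors]
    return [length / (1.0 + t / ea_newton) for length, t in zip(lengths, tensions)]


anchors = ((-1.0, 1.0), (1.0, 1.0))
print(line_tensions_2d(anchors, (0.0, 0.0), mass_kg=1.0))
print(flex_compensated_lengths(anchors, (0.0, 0.0), mass_kg=1.0, ea_newton=1000.0))
```

Real machines add pretension and more anchors, which makes the tension problem underdetermined. Choosing tensions is then a design decision, not a single solve.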

Now, Klipper is great but I didn't want it to be the only firmware in hp-sim. I wanted hp-sim to support ReprapFirmware as well. However, ReprapFirmware had no support for running on host (desktop machines) or dumping lists of time stamped motor commands. I wrote a roadmap and went on to spend a full month on adding ReprapFirmware batch mode support (Oct 19, 2025 - Nov 20, 2025).

With the new flex compensation algorithms, both Klipper and ReprapFirmware almost supported 8 anchors. They just lacked a proper forward transform. So I spent one week (Nov 25, 2025 - Dec 1, 2025) implementing a forward transform that's far superior in every way to what was available before.

On Dec 1, 2025, Klipper got the remaining parts of Hangprinter support that only ReprapFirmware had before (buildup compensation, block-and-tally style gearing, etc).

At this point I charged ahead with improving auto calibration. Hangprinter has had a kind of working auto calibration since 2017, but it had many problems. Ironically, it was labor-intensive and kind of unreliable.

Auto calibration is a dynamic process: a line of gcode is sent, some time passes, physics happens (is simulated), a measurement is made and handled, then some more lines of gcode are sent, and so on. Clearly, the "batch mode" dumps of motor commands I had been using did not suffice for developing auto calibration.

I spent four days (Dec 2, 2025 - Dec 5, 2025) implementing a "server mode" that lets the host version of ReprapFirmware run idle and wait for gcodes sent to its http endpoint (I also built the whole http server).

One of the problems with the old autocal was that it could not collect good data automatically. So I spent three days (Dec 7, 2025 - Dec 9, 2025) scripting the interactions (sending gcode, waiting, reading replies, etc.) for collecting structured encoder data from a large area of an uncalibrated Slideprinter (or, later, also Hangprinter++).
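The scripted collection loop can be sketched as: move, wait for the move to finish, measure, record. The client below assumes that the host build answers ReprapFirmware's standard HTTP endpoints `rr_gcode` and `rr_reply`. Several parts are placeholders:

- the base URL and port;
- the fixed sleep before reading the reply;
- `M114` as the measurement command. Real encoder readout on a Hangprinter would use a different command.

The transport is passed in as a function, so the loop itself can be tested without a running firmware. Class and function names are hypothetical.

```python
import time
import urllib.parse
import urllib.request


class RrfHttp:
    """Tiny client for ReprapFirmware-style rr_gcode / rr_reply endpoints (assumed)."""

    def __init__(self, base_url="http://localhost:8080"):  # placeholder URL
        self.base_url = base_url.rstrip("/")

    def send(self, gcode, reply_delay_s=0.1):
        query = urllib.parse.urlencode({"gcode": gcode})
        with urllib.request.urlopen(f"{self.base_url}/rr_gcode?{query}", timeout=5) as r:
            r.read()
        time.sleep(reply_delay_s)  # crude: give the firmware time to produce a reply
        with urllib.request.urlopen(f"{self.base_url}/rr_reply", timeout=5) as r:
            return r.read().decode()


def collect_grid(send, xs, ys, measure_cmd, feedrate=3000, settle_s=0.0):
    """Visit every (x, y) grid point, wait for the move, then record one measurement."""
    samples = []
    for x in xs:
        for y in ys:
            send(f"G1 X{x:.2f} Y{y:.2f} F{feedrate}")
            send("M400")  # wait until all queued moves have finished
            if settle_s:
                time.sleep(settle_s)  # let line oscillations die out
            reply = send(measure_cmd)
            samples.append({"commanded": (x, y), "reply": reply.strip()})
    return samples


# Usage against a running host firmware (not executed here):
# samples = collect_grid(RrfHttp().send, range(-300, 301, 100), range(-300, 301, 100), "M114")
```

Keeping the transport separate also means the same loop can target a physical machine or a simulated one.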

Another problem with the old autocal was that it was too slow, so I came up with a new idea for auto calibration on Dec 9 and spent seven days (Dec 10, 2025 - Dec 16, 2025) implementing it and comparing it to the previous autocal method. The results so far are promising: the new feature-based method runs 10x faster and has the same accuracy as the (new, improved version of the) old method. The new method also succeeds every time, while the old one often needs a couple of tries. Which one will perform best in 3d and with more diverse setups remains to be seen.

Stop and Breathe

All of this work, from Jan 22 to today (Dec 17), was greatly sped up by various AI tools but also by the simulation itself. Being able to test things right in the simulation has made experiments 10x cheaper, faster, and more pleasant. I've literally run hundreds of experiments that helped my understanding of Hangprinter's software and mechanics, each of which would have either been impossible or taken at least one day to do physically.

Where is HP5?

Still left to do:

- Upgrading all of hp-sim from 2d to 3d.

- Implementing spool simulation that includes realistic line buildup.

- An actual physical HP5 design, with BOM, suggested layouts and verification of various design decisions in simulation.

- An hp-sim mode to conduct batches of parallel experiments.

- Support for rotational degrees of freedom. (6dof)

If you want, you can go and play with the simulation here. Try clicking around. Many UI details are undocumented. I'm especially not proud of "Trace enabled, then triple right-click". Some instructions on how to use the simulation are scattered around the repo.

I'm including prompts and responses from the hp-sim5 repo here.

- tobben